How to discover all the data sources, low-fuss way

Sometimes you need to understand what databases and data sources your company uses. I’d like to show how I do that. I use a small spreadsheet that lists all the data sources, with just half a dozen questions about each data source.

This is mainly a personal tool: it will help you to organize your thoughts if you need to have a discussion with somebody, or with your team.

Let’s create this list of data sources. We’ll include both sources of truth and derived data sources. Also, we may include third-party data sources, not controlled by your company.

For each data source, we’ll write down the answers to the following questions:

Q1. Where is it stored?

Q2. Is it a copy of another dataset?

Q3. Why did we decide to create it?

Q4. How is it designed?

Q5. Where is it documented?

This list can help you better understand:

How many data sources you actually have: some of the items in this list may become a surprise;

Why are you paying for this database? You may either find a definitive answer, or find out that this database could actually be decommissioned;

How you can improve data processing by cleanly splitting some tables that got clumped together for historical reasons;

Is every component of this system properly owned and maintained? What about backups?

Etc., etc. (see the “Customizing” section at the end of this post).

This list may also be used to improve the speed of onboarding: people would get a more clear picture of the data from the start. You can even use it for your own personal onboarding if you just joined a new company.

Example: Mokum

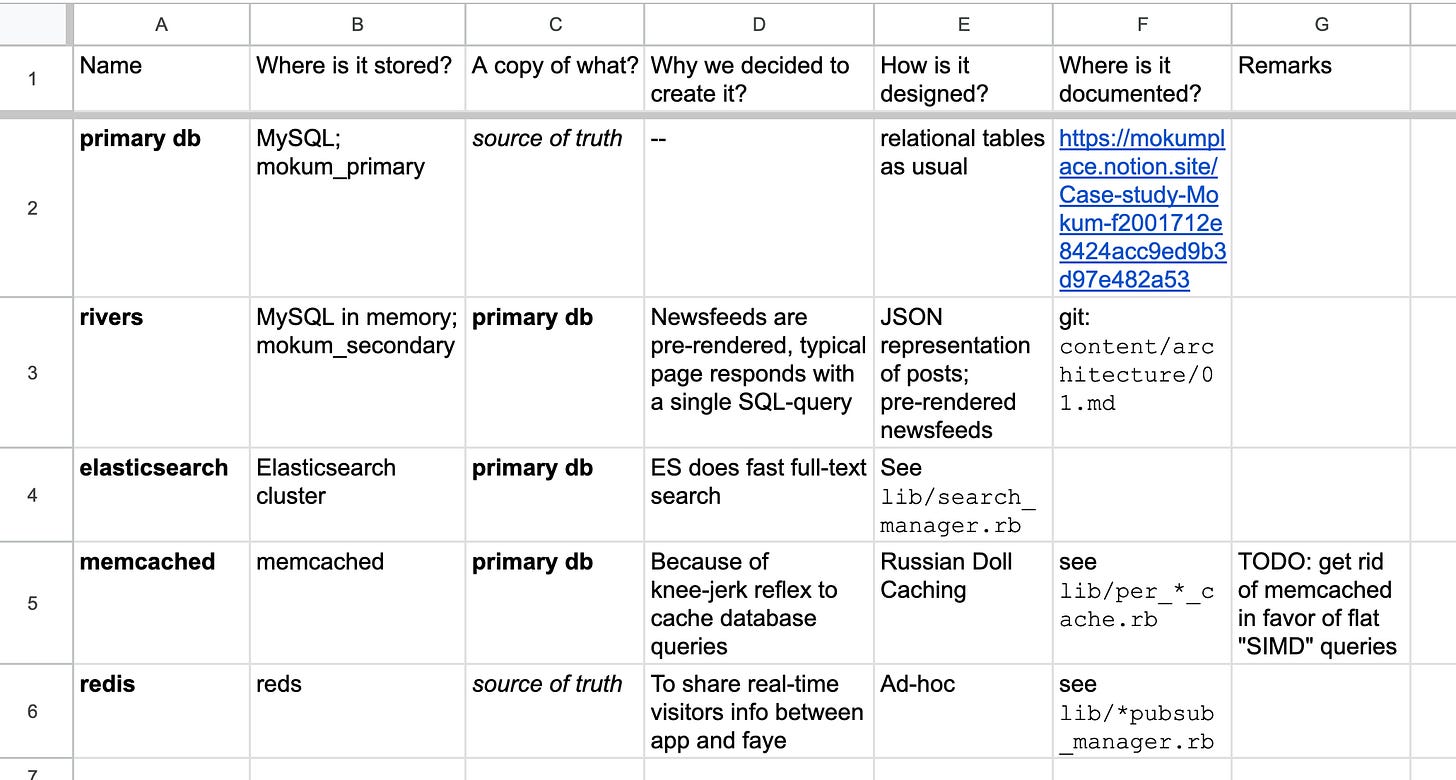

Here is an example of such a list for a small social network.

And here is the graph that shows all five data sources. Arrows connect the data sources that are “a copy of” another data source. Here, for example, “rivers” is a copy of “primary db”, while “redis” is a source of truth.

Each data source has a name. In this example it would be “primary db”, “rivers”, “elasticsearch”, “memcached” and “redis”. Use the names that you and your team would understand.

For example, here we may use the name of the database system, like “memcached”, because that’s clear enough. But if we’d have two instances of Redis, we’d want to use something like “realtime redis” and “control redis”, whatever that would mean.

Or, you may use a purpose of the data source. “Primary db” does not mention MySQL, even though it is stored in MySQL. This is because “rivers” are also stored in MySQL.

Let’s go over the questions and see what we can learn from them.

Q1. Where is it stored?

This one is easy. Write down the server software (such as MySQL, BigQuery, MongoDB, etc.).

Also, provide the name of the database, if needed. For example, here we have two different data sources in MySQL:

MySQL; database name:

mokum_primary;MySQL in memory; database name:

mokum_secondary.

You can list just one data source that covers several databases. For example, suppose that you have two Postgres databases: “inventory” and “orders”. You may decide that for the purposes of this document you need just one item in this list. You can name it “backend databases”.

Why not list each database separately? Sometimes you have many such databases, say a few dozen (a typical situation in service-oriented architectures). They’re all different, but at the core they’re just the “backend data”. Having 30 very similar rows in the list may become less helpful.

Of course, the other choice is also perfectly valid. If you need to distinguish between “inventory” and “orders”, do list them as separate items (and nodes of the graph).

Another thing that you may realize at this point is that you have some external databases that are not under your control. Examples:

Payments information in your payment provider such as Stripe. You have access to this database via their API, but in most cases you need to maintain a local copy of this data, to simplify data processing.

For some industries, a blockchain is such a data source. If you need to analyze the blockchain activity, you may want to load its contents to a relational database to make analytical queries possible.

Data from Google Analytics or similar services. They collect the visits information, and give you some sort of database access to this data.

Financial data such as forex rates. If you have to deal with multiple currencies, you probably use some sort of dataset that provides the up-to-date exchange rates. Most certainly you need to maintain a local copy of this data. Here you will have two items in the list: a third-party database and a table in your database that keeps a local copy of this data.

Please also note that ML models can and should be considered data sources for the purposes of this list. ML model is just a very specialized database, heavily optimized for very specific queries.

Q2. Is it a copy of some other database?

Every item in this list is either a source of truth, or a copy of another data source. In Minimal Modeling, we call it “primary data” and “secondary data”.

How to tell one from the other? If you delete some data (or all data) from the source of truth, you need to restore it from the backups. If you have no backups, the data is lost forever.

If you clobber the data in a secondary database, it may be recovered, you just need to reprocess the data stored in the source of truth.

Your website may even go down if the secondary database is clobbered, but only until the reprocessing is finished. On the ther hand, if the primary database is clobbered, you lose some or all of the business.

What does it mean to be a copy of another database? It may mean:

The data is copied almost verbatim, same tables, same columns and same data. For example, you load the data from your backend database to some sort of OLAP solution, such as Snowflake. This is what ELT (extract, load, transform) often does.

The data may be post-processed as much as needed.

Creating flat pre-joined tables from several source tables;

Aggregating data: e.g., sales data summarized by date/region/customer segment;

Unifying the way the data is stored. E.g., you may want to create a physical storage based directly on 6NF/anchor modeling; getting rid of JSON-typed columns etc.

Secondary database can also be a copy of another secondary database. For example, in the “forex rates” example, we may have the following sequence of copies:

Source of truth: third-party provider, accessible via API, which is

copied to: a table in the backend database, which is

copied to: a table in the data warehouse.

How is the data copied? There are two main scenarios:

Some sort of ELT (extract-load-transform) script is running; a very common situation;

The data is loaded on-demand, effectively serving as a cache. For example, memcached is the go-to solution for this.

Each secondary data source has some sort of propagation delay. When a piece of data changes in the primary database, it always takes some time until it is reflected in the secondary data. Recording the propagation time could provide useful insight.

Q3. Why did we decide to create it?

For primary data this question is easier to answer. Just write down what kind of information is stored there.

Each secondary database is created to make something faster. We trade disk space for query speed, and sometimes for convenience. So, here you have to write down, what does this data source make faster.

For example:

We copy the data from various backend databases to some sort of data warehouse because then we are able to join any table with any table. If two tables are in different databases, you can’t join them directly, you must use some sort of client-side JOIN. With data warehouse, all the data is effectively in a single database.

Another reason why data warehouse solutions may be faster is because they can dynamically allocate server capacity to process your queries. So, many data analysts can run their queries without the risk of bringing down the backend database.

Pre-aggregated tables handle some types of queries much faster than the original tables.

Flat tables are pre-joined and they too could be faster for some queries.

Some secondary databases are organized in such a way that they are more understandable to humans. They can also have better performance characteristics, but that’s two separate concerns.

So, write down why this secondary database was created and why it’s maintained. (And why you pay for it.)

Here you can notice potential for some optimizations. For example, you may find that the original purpose of this database is less relevant now, or that there are better ways today to achieve the same goal.

Also, you may re-confirm that this database is still useful, and that’ll make you appreciate it again.

You could add another column to our list, “estimated cost”. Find out how much you spend on this particular data source, in actual dollars. Then check if you can make this spending more efficient by putting more load on this data source, make it do more and extract more value.

Q4. How is it designed?

The answer to this question should be quite short. Write down a few words on the general design, if there is one. For example:

flat tables;

pre-rendered JSON tables;

anchor modeling/6NF.

Often the only thing that you could say here is something like “ad-hoc” or “tables as usual”. That’s fine. But if this data source worries you for some reason, and you have only a vague answer to this question, it may be a strong signal that you may want to dig deeper.

Q5. Where is it documented?

Here is a good place to put a link to your data catalog.

If this data source is maintained by some code — add a link to this code and its documentation.

This question is very close to the previous question, “how is it designed?”. We feel that this needs to be two different questions, however.

The previous question allows you to quickly estimate how much attention this data source needs. If it’s something simple and well-defined then you probably don’t need to worry. If it stands out as something unexpected, then you may need to find out why this discrepancy exists.

This question, on the other hand, lets you dig much deeper.

Customizing

This method of documenting the data sources is extensible. Whatever is your concern about some aspect of your data processing system, just add more columns as needed. For example:

Yearly cost of this data source;

Disk space occupied;

Number of servers, CPUs and memory;

Owning team;

Backup and recovery strategy;

Implementation reliability;

Etc., etc.

Another thing to notice: this list can be as comprehensive as you need. You add only the data sources that you care about in this context. Your company’s data processing system may just be too big, and you may be interested in some subset. Create the list, use it to discuss a topic at hand, and discard it.

Of course, the other approach is also valid. This approach is as collaborative as you need it to be. Create a shared master list of data sources and strive to make it as complete as possible. Share the spreadsheet, let people add new rows and columns, encourage everyone to fix the wrong information and fill in the missing pieces.

Summary

I’ve shown a simple method to describe all sorts of data sources that are used in your company. Use a spreadsheet, one data source per row. Answer just five main questions about each data source:

Q1. Where is it stored?

Q2. Is it a copy of another dataset?

Q3. Why did we decide to create it?

Q4. How is it designed?

Q5. Where is it documented?

Add more questions as needed.

Each row in the spreadsheet is a data source. One data source may include more than one database if they are similar; one physical database may contain different data sources (e.g., primary and secondary data). Include third-party data sources.